research interests

I’m broadly interested in statistics, machine learning, and optimization. I’m also interested in many applications, including finance, operations research, public policy, social good, sustainability, epidemiology, healthcare, autonomous vehicles, analytics, … Much of my recent work has focused on building reliable and trustworthy machine learning systems by taking a close look at the (many) statistical and computational issues that arise after a machine learning model has been deployed in real-world systems and scientific applications.

On a more technical level, I’m interested in – and have recently worked on projects related to – the following areas:

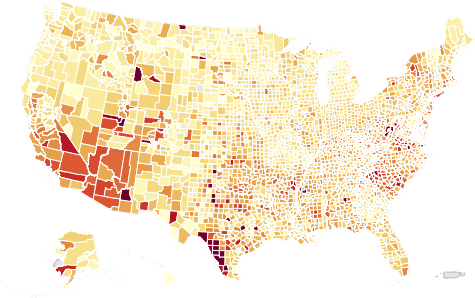

- distribution shift, robust optimization, subpopulation-level performance, responsible AI

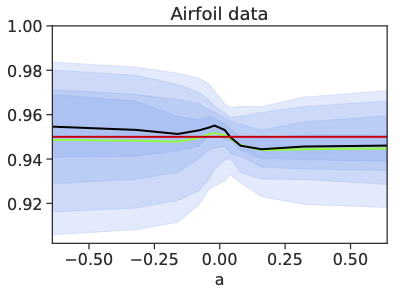

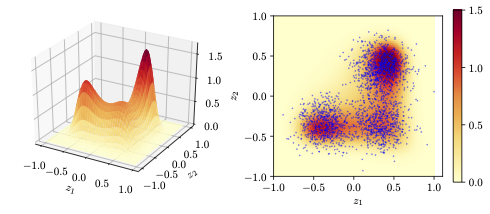

- conformal inference, distribution-free uncertainty quantification

- weak supervision

- tuning-parameter-free stochastic optimization

- implicit regularization

- sparse regression

- large-scale multiple testing

- risk estimation, model selection

representative publications

Here are a few representative publications related to the topics above:


- The Lifecycle of a Statistical Model: Model Failure Detection, Identification, and RefittingJournal of Machine Learning Research (under review), 2023

#subpopulation-level performance#responsible AI#conformal inference#weak supervision#large-scale multiple testing - Predictive Inference with Weak SupervisionJournal of Machine Learning Research (under review), 2022

#conformal inference#weak supervision#responsible AI - A Comment and Erratum on "Excess Optimism: How Biased is the Apparent Error of an Estimator Tuned by SURE?"Journal of the American Statistical Association (under review), 2022

#risk estimation#model selection - Minimum-Distortion EmbeddingFoundations and Trends in Machine Learning, 2021

#representation learning#convex optimization - Minimizing Oracle-Structured Composite FunctionsOptimization and Engineering, 2021

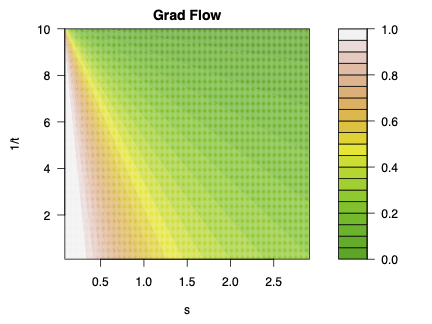

#stochastic optimization - Accelerated Gradient Flow: Risk, Stability, and Implicit Regularization2021

#implicit regularization#convex optimization - Computationally Efficient Posterior Inference With Langevin Monte Carlo and Early Stopping2021

#implicit regularization#Markov chain Monte Carlo - Robust Validation: Confident Predictions Even When Distributions ShiftJournal of the American Statistical Association, 2021

#distribution shift#robust optimization#conformal inference#responsible AI - The Implicit Regularization of Stochastic Gradient Flow for Least SquaresInternational Conference on Machine Learning (ICML), 2020

#implicit regularization#stochastic optimization - Confidence Bands for a Log-Concave DensityJournal of Computational and Graphical Statistics, 2020

#uncertainty quantification#convex optimization - A Continuous-Time View of Early Stopping for Least SquaresInternational Conference on Artificial Intelligence and Statistics (AISTATS), 2019

#implicit regularization#convex optimization - The Generalized Lasso Problem and UniquenessElectronic Journal of Statistics, 2019

#sparse regression#convex optimization - Communication-Avoiding Optimization Methods for Distributed Massive-Scale Sparse Inverse Covariance EstimationInternational Conference on Artificial Intelligence and Statistics (AISTATS), 2018

#sparse inverse covariance estimation#convex optimization#sparse regression - A Semismooth Newton Method for Fast, Generic Convex ProgrammingInternational Conference on Machine Learning (ICML), 2017

#convex optimization - Generalized Pseudolikelihood Methods for Inverse Covariance EstimationInternational Conference on Artificial Intelligence and Statistics (AISTATS), 2017

#sparse inverse covariance estimation#convex optimization#sparse regression - The Multiple Quantile Graphical ModelAdvances in Neural Information Processing Systems 29 (NeurIPS), 2016

#sparse inverse covariance estimation#convex optimization#sparse regression - Disciplined Convex Stochastic Programming: A New Framework for Stochastic OptimizationProceedings of the 31st Conference on Uncertainty in Artificial Intelligence (UAI), 2015

#stochastic optimization - Active Learning With Model SelectionAAAI Conference on Artificial Intelligence (AAAI), 2014

#model selection - Experiments With Kemeny Ranking: What Works When?Mathematical Social Sciences, 2012

#ranking - Learning Lexicon Models from Search Logs for Query ExpansionJoint Conference on Empirical Methods in Natural Language Processing and Computational Natural Language Learning (EMNLP-CoNLL), 2012

#ranking - Preferences in College Applications: A Nonparametric Bayesian Analysis of Top-10 RankingsNeural Information Processing Systems (NeurIPS) Workshop on Computational Social Science, 2010

#ranking#Markov chain Monte Carlo